Querying AI symposium asks how AI may shape research, society, and democracy

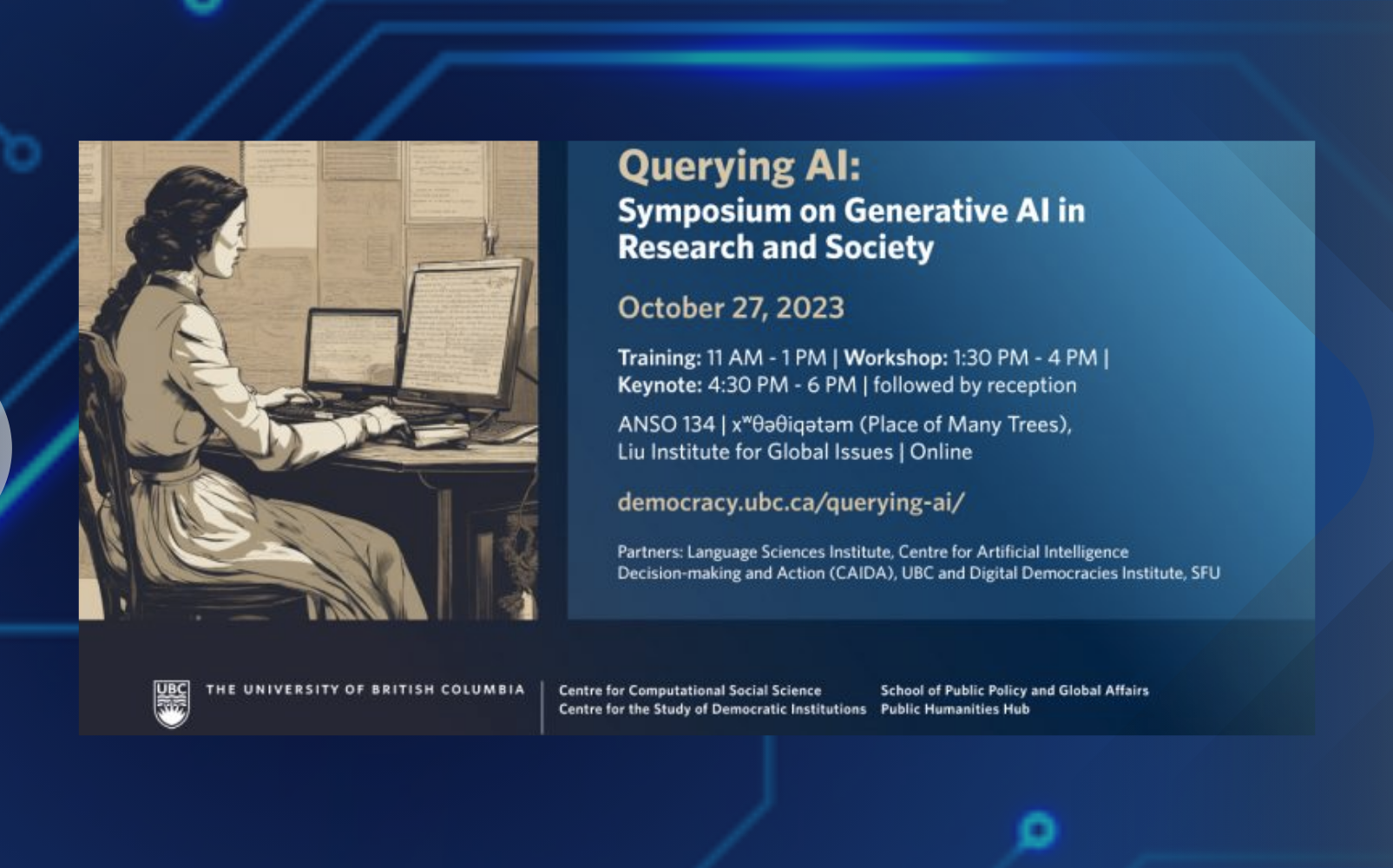

On October 27, 2023, the symposium Querying AI: Social Science and Humanities Perspectives on AI in Research and Society brought together faculty and students from UBC departments to engage in training and critical discussions.

The symposium addressed three broad questions:

- How can social science and humanities researchers use generative AI tools to better understand society, or to make ourselves better, more productive scholars?

- What ethical, legal, institutional, and social justice challenges arise from using AI, and how can we, as individuals and university communities, use these tools responsibly in our research, scholarship, and teaching?

- What impact will generative AI have on society and politics, and in particular on our democratic systems?

Generative AI in Research

To start the day, a training session walked participants through how to train a language model in Python and R, demystifying some of the technical aspects of language models. Workshop participants explored two word embedding models trained on 18th- and 19th-century English-language literature, looking in particular at gender stereotypes embedded in these novels. Women, we learned, are lovable, captivating, and bewitching, while men spit, roar, and growl.

Students, faculty, and researchers join the Querying AI symposium on Oct. 27, 2023

In the first workshop panel, How Can Humanities and Social Science Researchers Use Generative AI?, panelists focused on two main uses: (1) as a tool to study society and (2) as a digital research and writing assistant.

Laura Nelson (Director, Centre for Computational Social Science; Assistant Professor of Sociology, UBC) explained how researchers can use large language models to identify patterns in text and society, such as the moral valence associated with words like “healthy” or “overweight.” This research shows how these models reveal perspectives embedded in data, enabling us to study their dynamics.

Ethan Busby (Assistant Professor of Political Science, Brigham Young University) described himself as “a reluctant friend to AI,” due to his concerns about some AI uses. However, over the last three years he has used AI tools to study and improve political discussions. His top tips for scholars:

- Work in interdisciplinary teams. You don’t need to know it all!

- Generative AI is not just ChatGPT! Explore different Generative AI/Large Language Models to find ones that work best for your research question.

- Reproducibility is hard to achieve when using AI tools, making transparency in one’s approach even more critical.

Dongwook Yoon (Associate Professor of Computer Science, UBC) described five ways to use generative AI as a research and writing assistant, focusing on GPT-4. He ranked them from least to most risky:

- Copy editor: use tools to proofread and tweak your writing.

- Reviewer 2: Ask GPT-4 to find logical leaps or holes in the argument of writing you have already done.

- Generative idea creator: Begin with a topic and initial ideas, and use AI to help identify further ideas that may be worth pursuing.

- Writer’s block beater: Get past “blank page syndrome” by having an AI system write a first paragraph or outline, based on your own initial thoughts.

- Ghost-writer: Ask the AI system to compose writing for you, including both ideas and style. Highly risky. Not recommended!

Dilemmas of Ethics, Justice, and Law

The second workshop panel, Dilemmas of Using Generative AI in the Academy, turned to challenges in the areas of ethics, social justice, and law. Moderator Chris Tenove (Interim Director, Centre for the Study of Democratic Institutions) invited each panelist to describe a dilemma and provide some thoughts on how it might be addressed.

Laurie McNeill and Graham Reynolds at the ethics, justice, and law workshop session, with Ife Adebara joining online

Ife Adebara (PhD candidate, Linguistics, UBC) drew attention to the dominance of English and other well-resourced languages in AI development. For her PhD at UBC, she is developing resources for indigenous languages in Africa. She argued that developing tools for under-resourced languages requires not only resources and technical capacity but also careful attention to communities’ needs, including their desire for privacy and protection.

Laurie McNeill (Associate Dean, Students; Professor of Teaching, English Language and Literatures, UBC) asked the provocative question, What happens when we use AI systems to ‘author’ human experiences? Reflecting on the use of AI tools for scholarly work, personal profiles, and even autobiographies, she highlighted implications for how we think about authenticity and creativity when using writing tools including generative AI.

Graham Reynolds (Associate Dean, Research and International; Associate Professor, Peter A. Allard School of Law, UBC) discussed the thorny intellectual property issues posed by generative AI. What happens when people’s creations are slurped into the training data that powers generative AI? He discussed challenges such as re-evaluating what “fair use” of creative works means for AI systems, and broader impacts on the creative economy if people increasingly turn to AI systems rather than human artists and writers.

Can Democracy Survive AI?

In the keynote panel, moderated by Dr. Tenove, two leading thinkers on digital politics in Canada discussed the historical trajectory and contemporary risks and opportunities for AI in politics.

Fenwick McKelvey (Co-Director, Applied AI Institute; Associate Professor in Information and Communication Technology Policy, Concordia University) reminded us that algorithmic tools have for decades been considered the “new magic” in politics. However, right now “is an incredible time to care and be involved,” he said, as Canada and governments around the world are developing new AI policies. Dr. McKelvey pointed out some key aspects that could be improved in Canada’s proposed Bill C-27, including better data privacy regulation for political parties and clearer attention to potential systemic harms that AI could pose to democracy.

Elizabeth Dubois (University Research Chair in Politics, Communication and Technology; Associate Professor of Communication, University of Ottawa) shared examples from her lab’s forthcoming report, The Political Uses of AI in Canada, including a synthetic election video by the Alberta Party and deepfakes of political leaders, but also positive uses like Environmental Defence’s Federal Oil & Gas Lobbying Bot and an electoral information chatbot created by the City of Markham. On the question “can democracy survive AI?,” Dr. Dubois said the real question should be, “‘can democracy that is equitable and inclusive and not discriminatory be generated through the use of AI?’ That’s what I’m interested in, and I don’t know what the answer is. But I think the answer depends more on people than the tool itself.”

Watch the full public keynote here:

Want to dive in yourself?

The symposium included a training session on word embeddings, one of the technologies underpinning generative AI. See all the training session materials here, including how you can run the code yourself on OpenJupyter or Google Colab: https://github.com/ubcecon/ai-workshop.
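
For a feel of what a word embedding comparison involves before opening the workshop notebooks, here is a minimal Python sketch. It is not the workshop's code. The three-dimensional vectors are invented toy values chosen only for illustration, and the names `TOY_VECTORS`, `cosine_similarity`, and `gender_lean` are hypothetical. Embeddings trained on real text typically have many more dimensions and are learned from the corpus rather than written by hand.

```python
import math

# Hypothetical toy vectors, invented for illustration only.
# They are NOT taken from the workshop's trained models.
TOY_VECTORS = {
    "woman": [0.9, 0.1, 0.3],
    "man": [0.1, 0.9, 0.3],
    "captivating": [0.8, 0.2, 0.1],
    "growl": [0.2, 0.8, 0.1],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def gender_lean(word, vectors=TOY_VECTORS):
    """Similarity to 'woman' minus similarity to 'man'.

    Positive values mean the word sits closer to 'woman' in this
    vector space; negative values mean it sits closer to 'man'.
    """
    v = vectors[word]
    return cosine_similarity(v, vectors["woman"]) - cosine_similarity(v, vectors["man"])


if __name__ == "__main__":
    for word in ("captivating", "growl"):
        print(word, round(gender_lean(word), 3))
```

With embeddings trained on a real corpus, the same difference-of-similarities idea is one simple way to ask whether a word sits closer to one gendered term than the other in that corpus.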

Further Resources

- Leveraging AI for democratic discourse: Chat interventions can improve online political conversations at scale, an article co-authored by panelist Ethan Busby

- Panelist Dongwook Yoon explores the risks of digital doppelgangers

- Communicating with students about Generative AI, including syllabus guidelines by Dongwook Yoon

- Towards Afrocentric NLP for African Languages: Where We Are and Where We Can Go, an article co-authored by panelist Ife Adebara

- Arts Perspectives on AI: Faculty discuss integrity and innovation, UBC panel on Oct 25, 2023, featuring panelist Laurie McNeill

- The report that panelists Elizabeth Dubois and Fenwick McKelvey worked on

Event Organizers and Partners

Organizers:

Centre for the Study of Democratic Institutions (CSDI), UBC

Centre for Computational Social Science (CCSS), UBC

Partners:

Language Sciences Institute, UBC

Centre for Artificial Intelligence Decision-Making and Action (CAIDA), UBC

Faculty of Arts, UBC